Days after New Hampshire voters received a robocall with an artificial voice resembling President Joe Biden’s, the Federal Communications Commission banned the use of artificial intelligence-generated voices in robocalls.

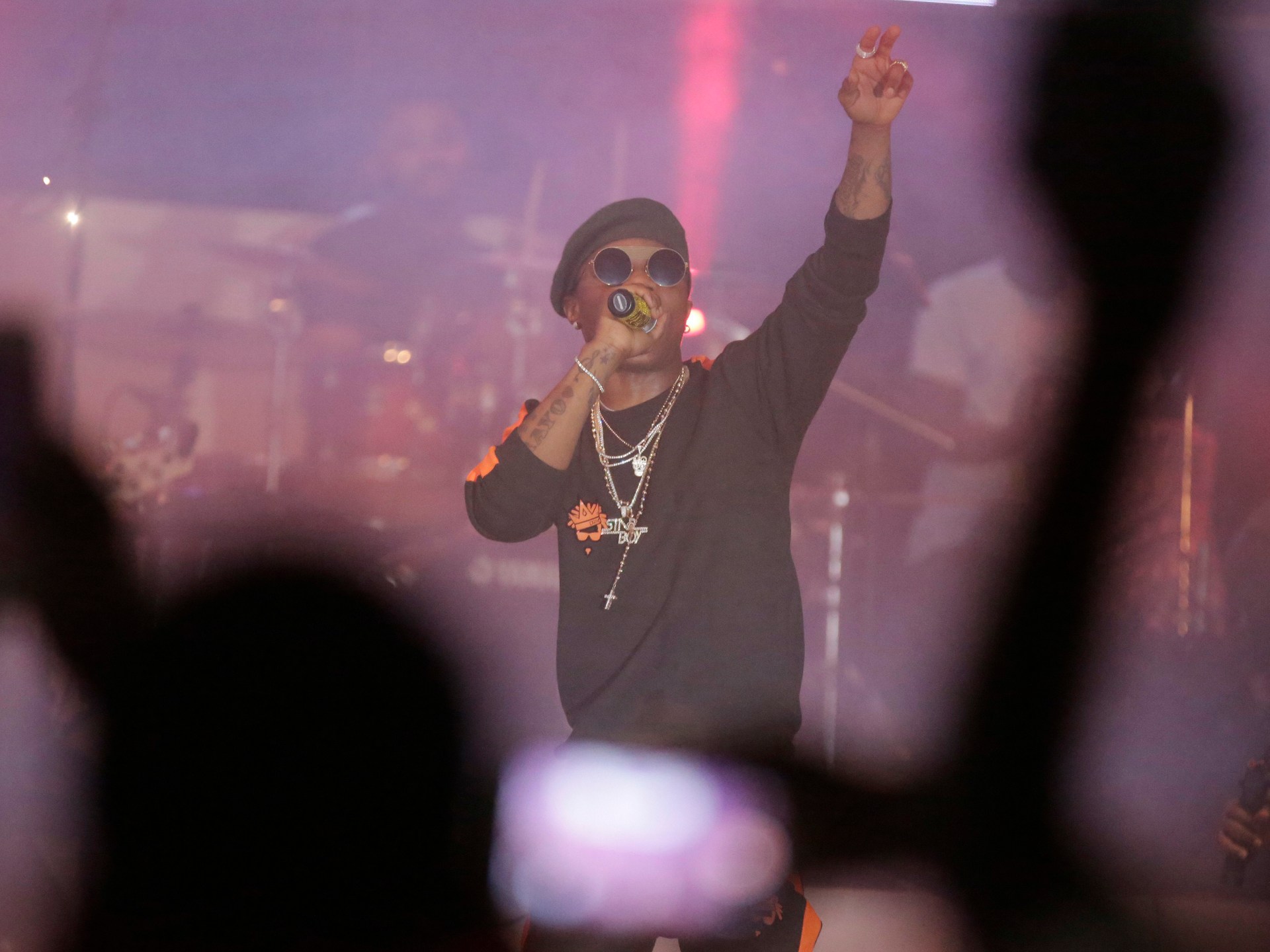

It was a flashpoint. The 2024 US election would be the first to unfold amid widespread public access to artificial intelligence generators, which allow people to create images, audio and video, some for nefarious purposes.

Organizations moved quickly to limit wrongdoing enabled by artificial intelligence.

Sixteen states enacted legislation around the use of artificial intelligence in elections and campaigns; many of these states required disclaimers in synthetic media published close to an election. The Election Assistance Commission, a federal agency that supports election officials, published an “AI Toolkit” containing tips election officials can use to communicate about elections in the age of fabricated information. States published their own pages to help voters identify AI-generated content.

Experts warned about the ability of artificial intelligence to create deepfakes that make candidates appear to say or do things they did not. Experts said the influence of artificial intelligence could harm the United States both domestically, by misleading voters, influencing their decision-making or deterring them from voting, and abroad, by benefiting foreign adversaries.

But the expected torrent of AI-driven disinformation never materialized. As Election Day came and went, viral misinformation played a major role, misleading people about vote counts, mail-in ballots and voting machines. However, this deception largely relied on old and familiar techniques, including text-based social media claims and videos or images taken out of context.

“It turns out that using generative AI is not necessary to mislead voters,” said Paul Barrett, deputy director of the Stern Center for Business and Human Rights at New York University. “This was not an AI election.”

Daniel Schiff, an assistant professor of technology policy at Purdue University, said there was no “massive eleventh-hour campaign” that misled voters about polling places and affected turnout. “This type of misinformation was smaller in scale and unlikely to be the deciding factor in the presidential election, at least,” he said.

Experts said the AI-generated claims that received the most attention supported existing narratives rather than making up new claims to deceive people. For example, after former President Donald Trump and his running mate, J.D. Vance, falsely claimed that Haitians were eating pets in Springfield, Ohio, AI-generated images and memes depicting animal abuse flooded the internet.

Meanwhile, technology and public policy experts said safeguards and legislation have reduced AI’s ability to create harmful political speech.

Schiff said the potential damage AI could cause in elections has sparked “urgent energy” focused on finding solutions.

“I think the great interest shown by public advocates, government agencies, researchers and the general public has been very significant,” Schiff said.

Meta, which owns Facebook, Instagram and Threads, required advertisers to disclose the use of artificial intelligence in any ads about politics or social issues. TikTok implemented a mechanism to automatically label some AI-generated content. OpenAI, the company behind ChatGPT and DALL-E, banned the use of its services for political campaigns and barred users from generating images of real people.

Disinformation actors used traditional techniques

AI’s power to influence the election also faded because there were other ways to gain that influence, said Siwei Lyu, a professor of computer science and engineering at the University at Buffalo and a digital media forensics expert.

“In this election, the impact of AI may seem weak because traditional formats were still more effective, and on social networking platforms like Instagram, accounts with large followings are using AI less,” said Herbert Chang, assistant professor of quantitative social sciences at Dartmouth College. Chang co-wrote a study that found that AI-generated images “generate less virality than traditional memes,” but that memes created with AI also generate virality.

High-profile people with large followings easily spread messages without the need for AI-generated media. For example, Trump repeatedly and falsely said in his speeches, in media interviews and on social media that illegal immigrants were being brought into the United States to vote, although instances of noncitizen voting are extremely rare and citizenship is required to vote in federal elections. Polls showed that Trump’s oft-repeated claim had an effect: More than half of Americans said in October they were concerned about noncitizens voting in the 2024 election.

PolitiFact’s fact-checks and stories on election-related misinformation have highlighted some images and videos that used AI, but much of the viral media was what experts call “cheap fakes” — original content that was deceptively edited without AI.

In other cases, politicians flipped the script, blaming or disparaging AI rather than using it. For example, Trump falsely claimed that a montage of his gaffes published by the Lincoln Project was created by artificial intelligence, and said that a crowd of Harris supporters was AI-generated. After CNN published a report that North Carolina Lt. Gov. Mark Robinson had made offensive comments on a porn forum, Robinson claimed it was artificial intelligence. One expert told WFMY-TV in Greensboro, North Carolina, that what Robinson claimed would be “almost impossible.”

Artificial intelligence used to stir up ‘partisan hostility’

Authorities discovered that a New Orleans street magician created the fake Biden robocall in January, in which a voice resembling the president’s could be heard discouraging people from voting in the New Hampshire primary. The magician said it took just 20 minutes and $1 to create the fake audio.

The political consultant who hired the magician to make the call faces a $6 million fine and 13 felony charges.

It was a standout moment, partly because it was not repeated.

AI was not the driver behind the spread of two major misleading narratives in the weeks leading up to Election Day, the fabricated claims about eating pets and falsehoods about the Federal Emergency Management Agency’s relief efforts in the wake of Hurricanes Milton and Helene, said Bruce Schneier, an adjunct lecturer in public policy at Harvard Kennedy School.

“We have seen deepfakes being used to seemingly effectively stir up partisan animosity, helping to establish or entrench some misleading or false views toward candidates,” Daniel Schiff said.

He worked with Kaylyn Schiff, an assistant professor of political science at Purdue University, and Christina Walker, a doctoral candidate at Purdue University, to create a database of political deepfakes.

The data showed that the majority of deepfake incidents were created as satire. Next most common were deepfakes aimed at damaging someone’s reputation, and the third most common were deepfakes created for entertainment.

Daniel Schiff said deepfakes that criticized or misled people about the candidates were “extensions of traditional American political narratives,” such as those that portray Harris as a communist or a clown, or Trump as a fascist or criminal. Chang agreed with Daniel Schiff, saying that generative AI “has exacerbated existing political divisions, not necessarily with the intent to mislead but through exaggeration.”

Major foreign influence operations have relied on actors, not artificial intelligence

Researchers warned in 2023 that artificial intelligence could help foreign adversaries conduct influence operations faster and more cheaply. The Foreign Malign Influence Center, which evaluates foreign influence activities targeting the United States, said in late September that artificial intelligence had not “revolutionized” those efforts.

To threaten the US election, foreign actors would have to overcome the limitations of artificial intelligence tools, evade detection and “strategically target and disseminate such content,” the center said.

Intelligence agencies, including the Office of the Director of National Intelligence, the FBI, and the Cybersecurity and Infrastructure Security Agency, flagged foreign influence operations, but these efforts often used actors in staged videos. One video showed a woman who claimed Harris had struck and injured her in a hit-and-run car crash. The video’s narrative was “entirely fabricated,” but it was not made with artificial intelligence. Analysts linked the video to a Russian network they called Storm-1516, which used similar tactics in videos that sought to undermine confidence in elections in Pennsylvania and Georgia.

Platform safeguards and state legislation likely helped curb the “worst behavior”

Social media and AI platforms have sought to make it more difficult for their tools to be used to spread harmful political content, by adding watermarks, labels and fact-checking to claims.

Meta AI and OpenAI said their tools rejected hundreds of thousands of requests to create AI images of Trump, Biden, Harris, Vance and Democratic vice presidential candidate Tim Walz. In a December 3 report on global elections in 2024, Meta’s head of global affairs, Nick Clegg, said that “assessments on AI content related to elections, politics and social topics account for less than 1 percent of all misinformation verified.”

However, there were shortcomings.

When prompted, ChatGPT still wrote campaign messages targeting specific voters, the Washington Post found. PolitiFact also found that Meta AI easily produced images that could support the narrative that Haitians were eating pets.

Daniel Schiff said the platforms have a long way to go as AI technology improves. But at least in 2024, the precautions they took and the states’ legislative efforts appear to have paid off.

“I think strategies like deepfake detection, efforts to raise public awareness, as well as outright bans, are all important,” Schiff said.

2024-12-25 09:15:00